
Last semester, my Neuroinformatik (neuroinformatics) course had a chapter on Hopfield networks.

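A Hopfield net is a neural network with feedback, i.e. the output of the net at time $t$ becomes the input of the net at time $t + 1$. For example, the output of neuron $j$ at time $t + 1$ is given by

$$y_j(t+1) = \operatorname{sgn}\left(\sum_{i=1}^{N} w_{ij}\, y_i(t) - \theta_j\right)$$

where $w_{ij}$ is the weight of the connection from neuron $i$ to neuron $j$, $\theta_j$ is the threshold of neuron $j$, and $N$ is the number of neurons in the Hopfield net.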
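The feedback step described above can be sketched in a few lines of Python. This is a minimal illustration, not the course's reference code: it assumes bipolar states ($\pm 1$), treats a net input exactly equal to the threshold as $+1$ (an assumed tie-breaking convention), and updates all neurons at once from the state at time $t$. The names `sign` and `update_sync` and the 2-neuron weight matrix are hypothetical.

```python
def sign(v):
    # Bipolar sign function; mapping v == 0 to +1 is an assumed tie-breaking convention
    return 1 if v >= 0 else -1

def update_sync(weights, theta, y):
    """Synchronous step: every neuron j computes y_j(t+1) from the whole state y(t).

    weights[i][j] is the weight from neuron i to neuron j, theta[j] the threshold of j.
    """
    n = len(y)
    return [
        sign(sum(weights[i][j] * y[i] for i in range(n)) - theta[j])
        for j in range(n)
    ]

# Feedback: the output at time t is fed back as the input at time t + 1.
weights = [[0, 1], [1, 0]]   # hypothetical 2-neuron net
theta = [0, 0]
y = [1, -1]
for t in range(4):
    print(t, y)
    y = update_sync(weights, theta, y)
```

With fully synchronous updates this tiny net alternates between `[1, -1]` and `[-1, 1]` forever. Updating one neuron at a time (asynchronous updates) avoids such two-cycles when the weights are symmetric with a zero diagonal, which is why asynchronous updating is often preferred in practice.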

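If the weights are initialized suitably, the Hopfield net can be used as an autoassociative memory that stores a certain number of patterns. When presented with an initial input, the net converges to the stored pattern that most closely resembles that input, provided the number of stored patterns is small relative to $N$. To achieve this, the weights are initialized as follows:

$$w_{ij} = \begin{cases} \sum_{p=1}^{P} x_i^{(p)}\, x_j^{(p)} & i \neq j \\ 0 & i = j \end{cases}$$

where the vectors $\mathbf{x}^{(1)}, \dots, \mathbf{x}^{(P)} \in \{-1, +1\}^N$ are the patterns to be learned.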

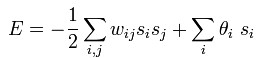
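The storage rule and the recall behaviour described above can be tried out with a small pure-Python sketch. Assumptions (not from the lecture): bipolar patterns, all thresholds set to zero, and asynchronous recall that updates one neuron at a time in a fixed order until a full sweep changes nothing. The functions `store` and `recall` and the two 8-neuron patterns are hypothetical examples.

```python
def store(patterns):
    """Hebbian storage: w_ij = sum_p x_i^p * x_j^p for i != j, w_ii = 0."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for x in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += x[i] * x[j]
    return w

def recall(w, y, max_sweeps=100):
    """Asynchronous recall with zero thresholds (assumption): update neurons one at a
    time in a fixed order until a full sweep changes nothing."""
    y = list(y)
    n = len(y)
    for _ in range(max_sweeps):
        changed = False
        for j in range(n):
            h = sum(w[i][j] * y[i] for i in range(n))  # net input to neuron j
            new = 1 if h >= 0 else -1
            if new != y[j]:
                y[j] = new
                changed = True
        if not changed:
            break
    return y

# Two hypothetical 8-neuron patterns (orthogonal, so they interfere little)
p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
w = store([p1, p2])

noisy = [-1, 1, 1, 1, -1, -1, -1, -1]   # p1 with its first bit flipped
print(recall(w, noisy) == p1)  # True
```

Once `store` returns, the patterns themselves are no longer kept anywhere: everything the net "knows" lives in the weight matrix `w`.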

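Every state of a Hopfield net has an associated scalar value called the "energy" $E$ of the network:

$$E = -\frac{1}{2}\sum_{i=1}^{N}\sum_{j=1}^{N} w_{ij}\, y_i\, y_j + \sum_{j=1}^{N} \theta_j\, y_j$$

With symmetric weights ($w_{ij} = w_{ji}$, $w_{ii} = 0$) and neurons updated one at a time, no update increases $E$, so the net settles into a local minimum of the energy; the stored patterns are such minima.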
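A standard property of this energy is that, for symmetric weights with $w_{ii} = 0$ and one-neuron-at-a-time updates, no single update increases it. The sketch below checks this numerically on a hypothetical random network. Assumptions: bipolar states, ties (net input equal to the threshold) mapped to $+1$, and small integer weights and thresholds so that every energy value is exact in floating point. The helpers `energy`, `async_step` and `random_symmetric` are illustrative names, not a library API.

```python
import random

def energy(w, theta, y):
    """E = -1/2 * sum_i sum_j w_ij y_i y_j + sum_j theta_j y_j."""
    n = len(y)
    quad = sum(w[i][j] * y[i] * y[j] for i in range(n) for j in range(n))
    return -0.5 * quad + sum(theta[j] * y[j] for j in range(n))

def async_step(w, theta, y, j):
    """Update neuron j in place; net input equal to the threshold maps to +1 (assumption)."""
    h = sum(w[i][j] * y[i] for i in range(len(y)))  # w[j][j] == 0, so self-input is 0
    y[j] = 1 if h - theta[j] >= 0 else -1

def random_symmetric(n, rng):
    # Hypothetical test network: symmetric integer weights, zero diagonal
    w = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            w[i][j] = w[j][i] = rng.randint(-3, 3)
    return w

rng = random.Random(0)
n = 10
w = random_symmetric(n, rng)
theta = [rng.randint(-2, 2) for _ in range(n)]
y = [rng.choice([-1, 1]) for _ in range(n)]

energies = [energy(w, theta, y)]
for sweep in range(20):
    for j in range(n):
        async_step(w, theta, y, j)
        energies.append(energy(w, theta, y))

# With symmetric weights and w_jj = 0, no single-neuron update raises E.
print(all(b <= a for a, b in zip(energies, energies[1:])))  # True
```

Since $E$ is bounded below and cannot increase, the net must eventually stop changing; where it stops depends on the starting state, which is exactly the "local optimum" behaviour.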

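Put simply, the learned "knowledge" is not stored in the neurons themselves but in the connections between them. The output of each neuron in turn depends on the input it receives from the neurons connected to it. In this respect it resembles human memory.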

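P.S. An analogy just occurred to me: science is not the global optimum; God is. Science is only the current local optimum, the best solution that can be found with limited time and limited space. As long as we are not God, the answers science provides are like such a local optimum: a reality we simply have to accept. To escape these limits, we need to randomly reset the starting point of our search from time to time.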
